Shards: share logic

Other cases are better solved by extracting the logic that manipulates multiple shards:

- Splitting a service (as discussed above) yields a component that represents both shared data and shared logic.

- Adding a Middleware lets the shards communicate with each other without keeping direct connections. It may also take over housekeeping: error recovery, replication, and scaling.

- A Sharding Proxy hides the existence of the shards from clients.

- An Orchestrator calls (or messages) multiple shards to serve a user request. That relieves the shards of the need to coordinate their states and actions by themselves.

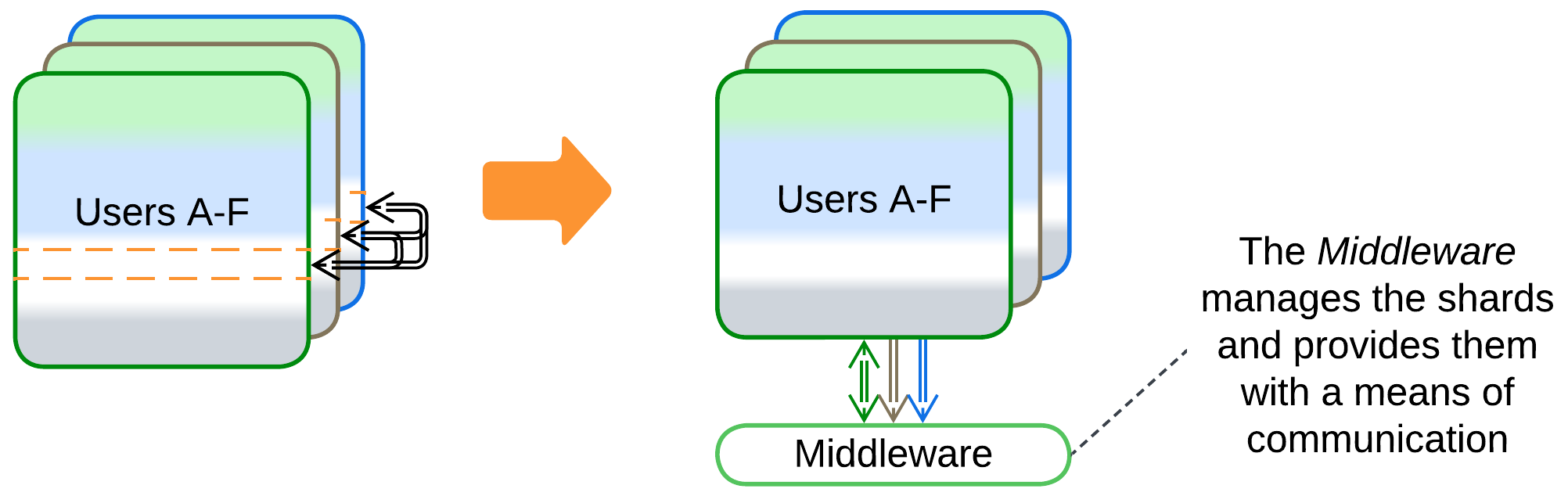

Add a Middleware

Patterns: Shards, Middleware, Layers.

Goal: simplify communication between shards, their deployment, and recovery.

Prerequisite: many shards need to exchange information, and some of them may fail.

A Middleware transports messages between shards, checks their health, and restarts those that have crashed. It may also manage data replication and the deployment of software updates.
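The two core duties described above, message transport and crash recovery, can be sketched in a few lines. This is a minimal in-process illustration, not a real Middleware: the names `Shard`, `Middleware`, `register`, `send`, and `check_health` are hypothetical, and a crash is simulated by setting an `alive` flag.

```python
from typing import Callable, Dict, List, Tuple


class Shard:
    """Hypothetical shard: it only stores the messages it receives."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.alive = True  # set to False to simulate a crash
        self.inbox: List[Tuple[str, str]] = []

    def receive(self, sender: str, payload: str) -> None:
        self.inbox.append((sender, payload))


class Middleware:
    """Routes messages by shard name and replaces crashed shards."""

    def __init__(self, factory: Callable[[str], Shard]) -> None:
        self._factory = factory
        self._shards: Dict[str, Shard] = {}

    def register(self, name: str) -> Shard:
        self._shards[name] = self._factory(name)
        return self._shards[name]

    def send(self, sender: str, recipient: str, payload: str) -> bool:
        """Deliver a message; return False if the recipient is unavailable."""
        shard = self._shards.get(recipient)
        if shard is None or not shard.alive:
            return False  # the sender may retry later
        shard.receive(sender, payload)
        return True

    def check_health(self) -> List[str]:
        """Replace every crashed shard with a fresh instance; return their names."""
        restarted = []
        for name, shard in list(self._shards.items()):
            if not shard.alive:
                self._shards[name] = self._factory(name)
                restarted.append(name)
        return restarted
```

Note that the shards address each other only by name and never hold references to one another, which is what lets the Middleware swap a crashed instance for a new one. In this sketch the replacement starts empty; restoring its data would be the job of the replication that a real Middleware may provide.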

Pros:

- The shards become simpler because they don’t need to track each other.

- There are many good third-party implementations.

Cons:

- Performance may degrade because every message now passes through an additional layer.

- Components of the Middleware are new points of failure.

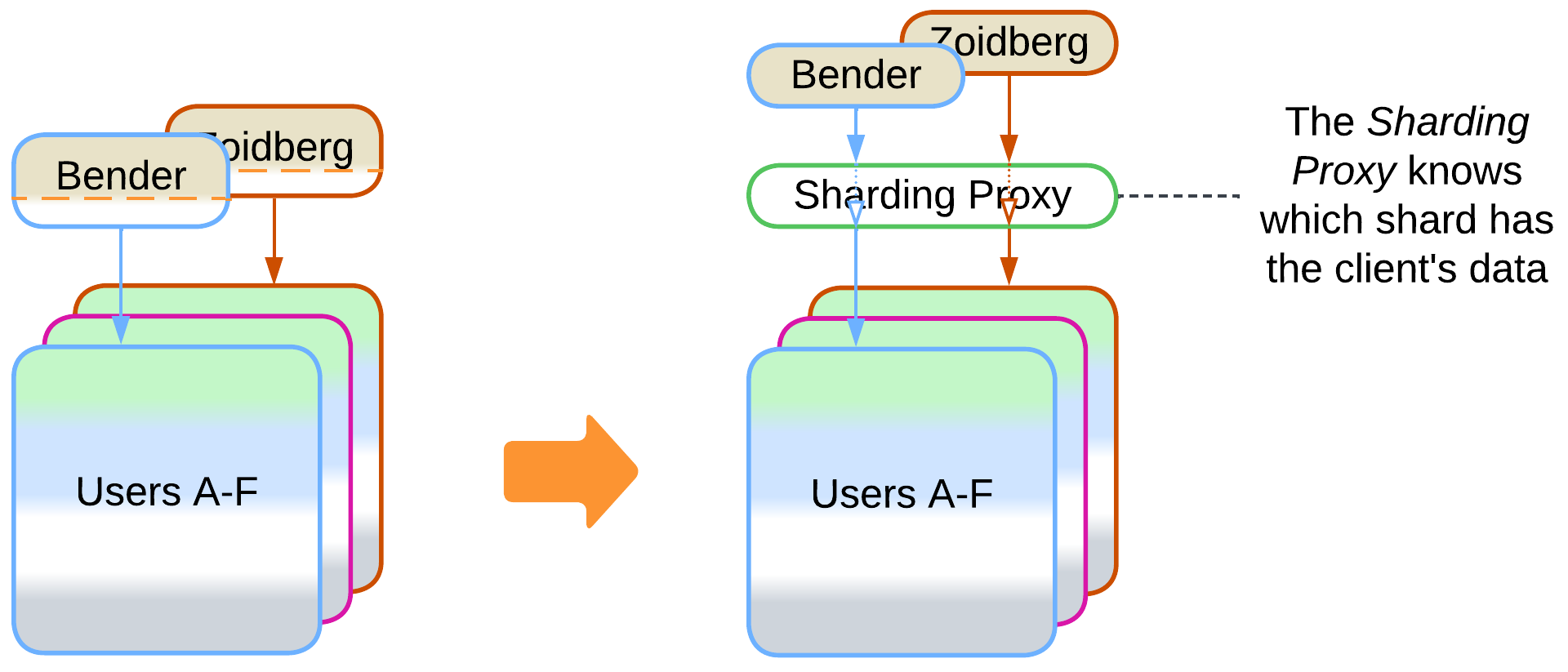

Add a Sharding Proxy

Patterns: Shards, Sharding Proxy (Proxy), Layers.

Goal: simplify the code on the client side, hide your implementation from clients.

Prerequisite: each client connects directly to the shard which owns its data.

The client application may know the address of the shard which serves it and connect to it without intermediaries. That is the fastest means of communication, but it prevents you from changing the number of shards or other details of your implementation without updating all the clients, which may be unachievable. A Sharding Proxy placed between the clients and the shards removes that dependency: the clients talk only to the Proxy, which forwards each request to the shard that owns the data.
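A minimal sketch of such an intermediary follows. It assumes that requests are keyed (for example, by a client identifier) and that the owning shard is chosen by hashing the key; the class `ShardingProxy` and its `put` and `get` methods are hypothetical, and plain dictionaries stand in for the shards.

```python
import hashlib
from typing import Dict, List, Optional


class ShardingProxy:
    """Forwards each request to the shard that owns the key."""

    def __init__(self, shards: List[Dict[str, str]]) -> None:
        if not shards:
            raise ValueError("at least one shard is required")
        self._shards = shards  # dictionaries stand in for real shards

    def _shard_for(self, key: str) -> Dict[str, str]:
        # A stable hash, so every Proxy instance picks the same shard for a key.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self._shards)
        return self._shards[index]

    def put(self, key: str, value: str) -> None:
        self._shard_for(key)[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._shard_for(key).get(key)
```

The client calls `put` and `get` without knowing how many shards exist. Modulo hashing is chosen here only for brevity: it remaps most keys when the number of shards changes, so a real deployment would have to migrate data or use a scheme such as consistent hashing.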

Pros:

- Your system becomes isolated from its clients.

- You can put generic aspects into the Proxy instead of implementing them in the shards.

- Proxies are readily available.

Cons:

- The extra network hop increases latency unless you deploy the Sharding Proxy as an Ambassador [DDS] co-located with every client, which brings back the issue of client software updates.

- The Sharding Proxy is a single point of failure unless replicated.

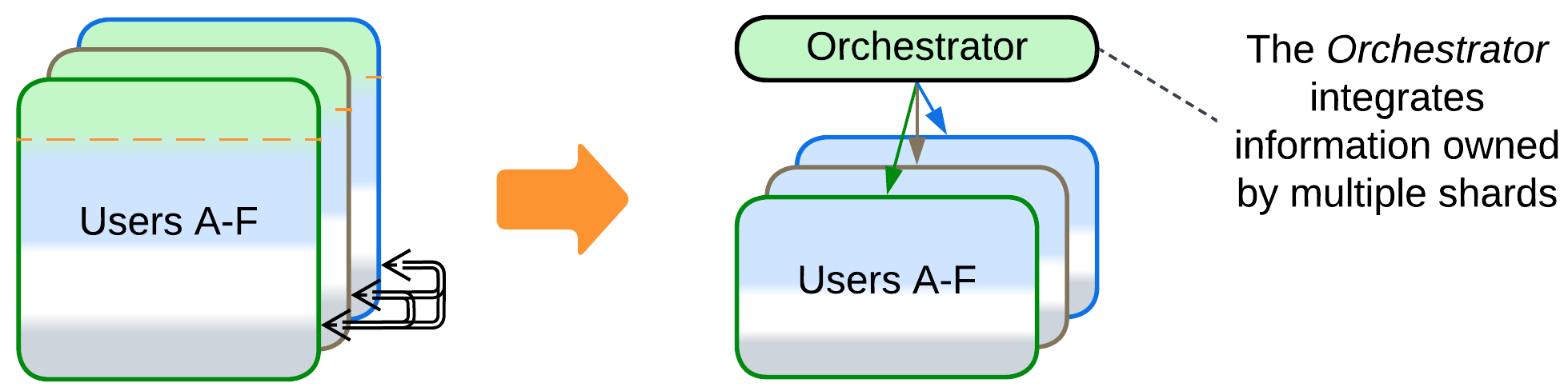

Move the integration logic into an Orchestrator

Patterns: Shards, Orchestrator, Layers.

Goal: isolate the shards from awareness of each other.

Prerequisite: the shards are coupled via their high-level logic.

When a high-level scenario uses multiple shards (Scatter-Gather and MapReduce are the simplest examples), extract all such scenarios into a dedicated stateless module. That makes the shards independent of each other.
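As an illustration of the simplest case, Scatter-Gather, here is a sketch of a stateless orchestrating function that queries every shard in parallel and merges the partial results. The names `SearchableShard`, `search`, and `scatter_gather` are hypothetical, and the shards are in-memory objects rather than remote services.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


class SearchableShard:
    """Hypothetical shard that owns a subset of the rows."""

    def __init__(self, rows: Dict[str, int]) -> None:
        self._rows = rows

    def search(self, prefix: str) -> Dict[str, int]:
        return {k: v for k, v in self._rows.items() if k.startswith(prefix)}


def scatter_gather(shards: List[SearchableShard], prefix: str) -> Dict[str, int]:
    """Send the query to every shard (scatter) and merge the answers (gather)."""
    with ThreadPoolExecutor(max_workers=max(1, len(shards))) as pool:
        partials = list(pool.map(lambda shard: shard.search(prefix), shards))
    merged: Dict[str, int] = {}
    for partial in partials:
        merged.update(partial)
    return merged
```

The function keeps no state between calls, and the shards never call each other: all knowledge of the multi-shard scenario lives in `scatter_gather`.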

Pros:

- The shards don’t have to be aware of each other.

- The high-level logic can be written in a high-level language by a dedicated team.

- The high-level logic can be deployed independently.

- The code of the shards should become much simpler.

Cons:

- Latency will increase.

- The Orchestrator becomes a single point of failure with a good chance of corrupting your data if it crashes in the middle of a multi-shard scenario.

Further steps:

- Shard or replicate the Orchestrator to support higher load and to remain online if one of its instances fails.

- Persist the Orchestrator (give it a dedicated database) to make sure that it does not leave half-committed transactions upon failure.

- Divide the Orchestrator into Backends for Frontends or a SOA-style layer if you have multiple kinds of clients or workflows, respectively.
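The idea of persisting the Orchestrator can be sketched as a journal of completed steps that is consulted on restart. This is only an illustration under simplifying assumptions: an append-only file stands in for the dedicated database, and the class `JournaledOrchestrator`, its `run` method, and the scenario identifiers are hypothetical.

```python
import json
import os
from typing import Callable, List


class JournaledOrchestrator:
    """Records every completed step so that a restart can resume a scenario."""

    def __init__(self, journal_path: str) -> None:
        self._path = journal_path

    def _completed(self, scenario_id: str) -> int:
        """Count the steps of this scenario already recorded in the journal."""
        if not os.path.exists(self._path):
            return 0
        with open(self._path, encoding="utf-8") as journal:
            return sum(
                1 for line in journal
                if json.loads(line)["scenario"] == scenario_id
            )

    def run(self, scenario_id: str, steps: List[Callable[[], None]]) -> int:
        """Run the steps not yet journaled; return how many were executed now."""
        done = self._completed(scenario_id)
        for index in range(done, len(steps)):
            steps[index]()  # for example, a call to one shard
            with open(self._path, "a", encoding="utf-8") as journal:
                record = {"scenario": scenario_id, "step": index}
                journal.write(json.dumps(record) + "\n")
                journal.flush()
                os.fsync(journal.fileno())  # make the record survive a crash
        return len(steps) - done
```

If the process dies after a step has run but before its record is written, that step is executed again on restart, so in this sketch the steps are assumed to be idempotent.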