Proxy #

Should I build the wall? A layer of indirection between your system and its clients.

Known as: Proxy [GoF].

Aspects:

- Routing,

- Offloading.

Variants:

By transparency:

- Full Proxy,

- Half-Proxy.

By placement:

- Standalone,

- Sidecar [DDS],

- Ambassador [DDS].

By function:

- Firewall / (API) Rate Limiter / API Throttling,

- Response Cache / Read-Through Cache / Write-Through Cache / Write-Behind Cache / Cache [DDS] / Caching Layer [DDS] / Distributed Cache / Replicated Cache,

- Load Balancer [DDS] / Sharding Proxy [DDS] / Cell Router / Messaging Grid [FSA] / Scheduler,

- Dispatcher [POSA1] / Reverse Proxy / Ingress Controller / Edge Service / Microgateway,

- Adapter [GoF, DDS] / Anticorruption Layer [DDD] / Open Host Service [DDD] / Gateway [PEAA] / Message Translator [EIP, POSA4] / API Service / Cell Gateway / (inexact) Backend for Frontend / Hardware Abstraction Layer (HAL) / Operating System Abstraction Layer (OSAL) / Platform Abstraction Layer (PAL) / Database Abstraction Layer (DBAL or DAL) / Database Access Layer [POSA4] / Data Mapper [PEAA] / Repository [PEAA, DDD],

- (with Orchestrator) API Gateway [MP].

See also Backends for Frontends (a Gateway per client type).

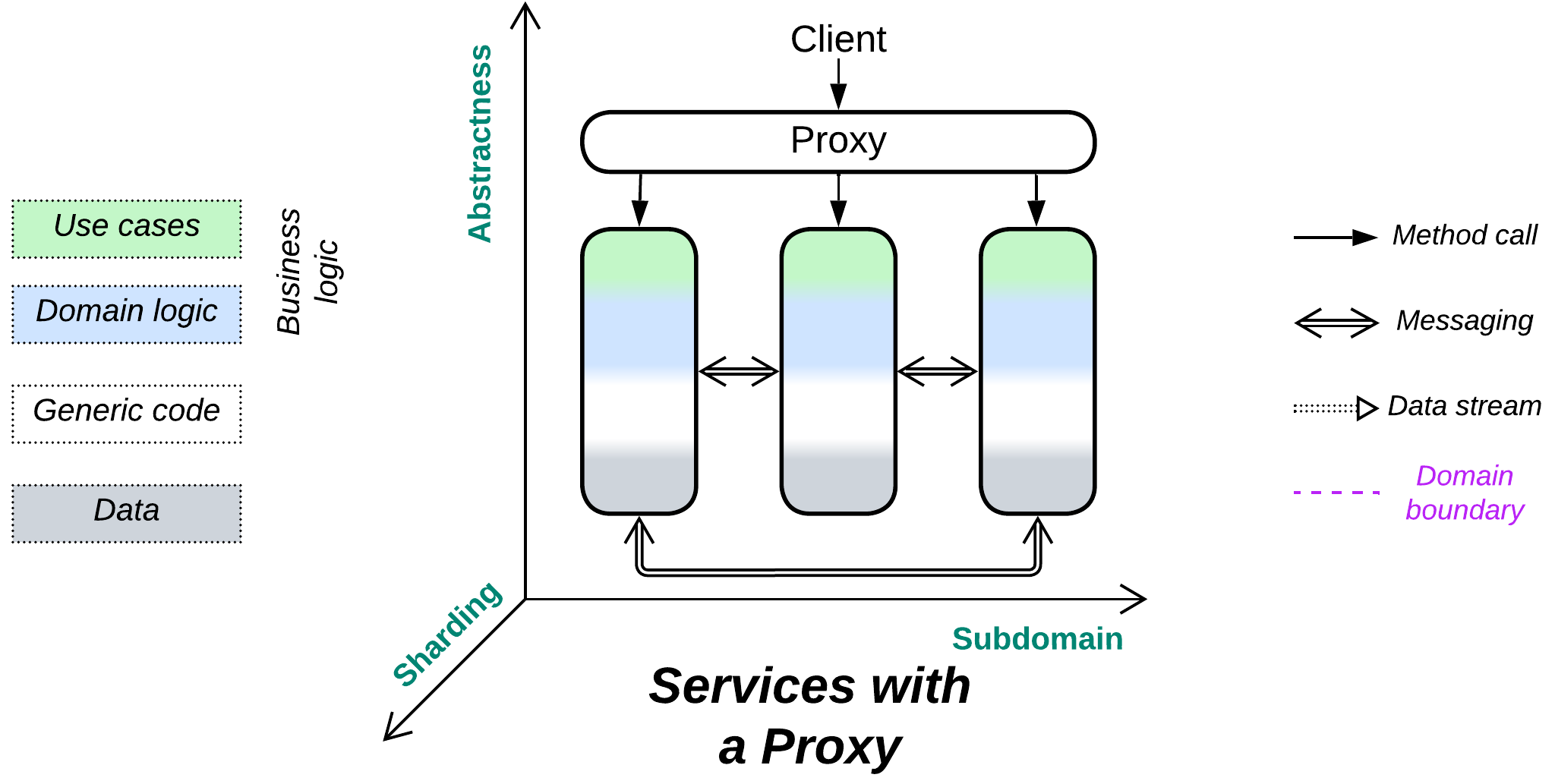

Structure: A layer that pre-processes and/or routes user requests.

Type: Extension.

| Benefits | Drawbacks |
|---|---|
| Separates cross-cutting concerns from the services | A single point of failure |
| Decouples the system from its clients | Most proxies degrade latency |
| Low attack surface | |
| Available off the shelf | |

References: Half of [DDS] is about the use of Proxies. See also: [POSA4] on Proxy; Chris Richardson and Microsoft on API Gateway; Martin Fowler on Gateway, Facade and API Gateway.

A Proxy stands between a (sub)system’s implementation and its users. It receives a request from a client, does some pre-processing, then forwards the request to a lower-level component. In other words, a Proxy encapsulates selected aspects of the system’s communication with its clients by serving as yet another layer of indirection. It may also decouple the system’s internals from changes in the public protocol. The main functions of a proxy include:

- Routing – a Proxy tracks addresses of deployed instances of the system’s components and is able to forward a client’s request to the shard or service which can handle it. Clients need to know only the public address of the Proxy. A Proxy may also respond on its own if the request is invalid or there is a matching response in the Proxy’s cache.

- Offloading – a Proxy may implement generic aspects (cross-cutting concerns) of the system’s public interface, such as authentication, authorization, encryption, request logging, and web protocol support, which would otherwise need to be implemented by the underlying system components. That allows the services to concentrate on what you write them for – the business logic.
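The two functions above can be sketched in a few lines. This is a toy in-process model, not a real network proxy: the `Proxy` class, the dictionary-shaped requests, the token check, and the two services are all made up for illustration.

```python
from typing import Callable, Dict, Set

Handler = Callable[[dict], dict]


class Proxy:
    """Toy in-process proxy: authenticates a request, then routes it by path prefix."""

    def __init__(self, valid_tokens: Set[str]) -> None:
        self._routes: Dict[str, Handler] = {}
        self._valid_tokens = valid_tokens

    def register(self, prefix: str, handler: Handler) -> None:
        """Tell the proxy which component serves which part of the public API."""
        self._routes[prefix] = handler

    def handle(self, request: dict) -> dict:
        # Offloading: authentication lives here, not in the services.
        if request.get("token") not in self._valid_tokens:
            return {"status": 401, "body": "unauthorized"}
        # Routing: the longest matching prefix wins.
        path = request.get("path", "")
        for prefix in sorted(self._routes, key=len, reverse=True):
            if path.startswith(prefix):
                return self._routes[prefix](request)
        # The proxy responds on its own when the request is invalid.
        return {"status": 404, "body": "no route"}


def orders_service(request: dict) -> dict:
    return {"status": 200, "body": f"orders handled {request['path']}"}


def users_service(request: dict) -> dict:
    return {"status": 200, "body": f"users handled {request['path']}"}


proxy = Proxy(valid_tokens={"secret"})
proxy.register("/orders", orders_service)
proxy.register("/users", users_service)
```

The services never see an unauthenticated request, and the client only ever talks to `proxy.handle`.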

Performance #

Most kinds of proxies trade latency (the extra network hop) for some other quality:

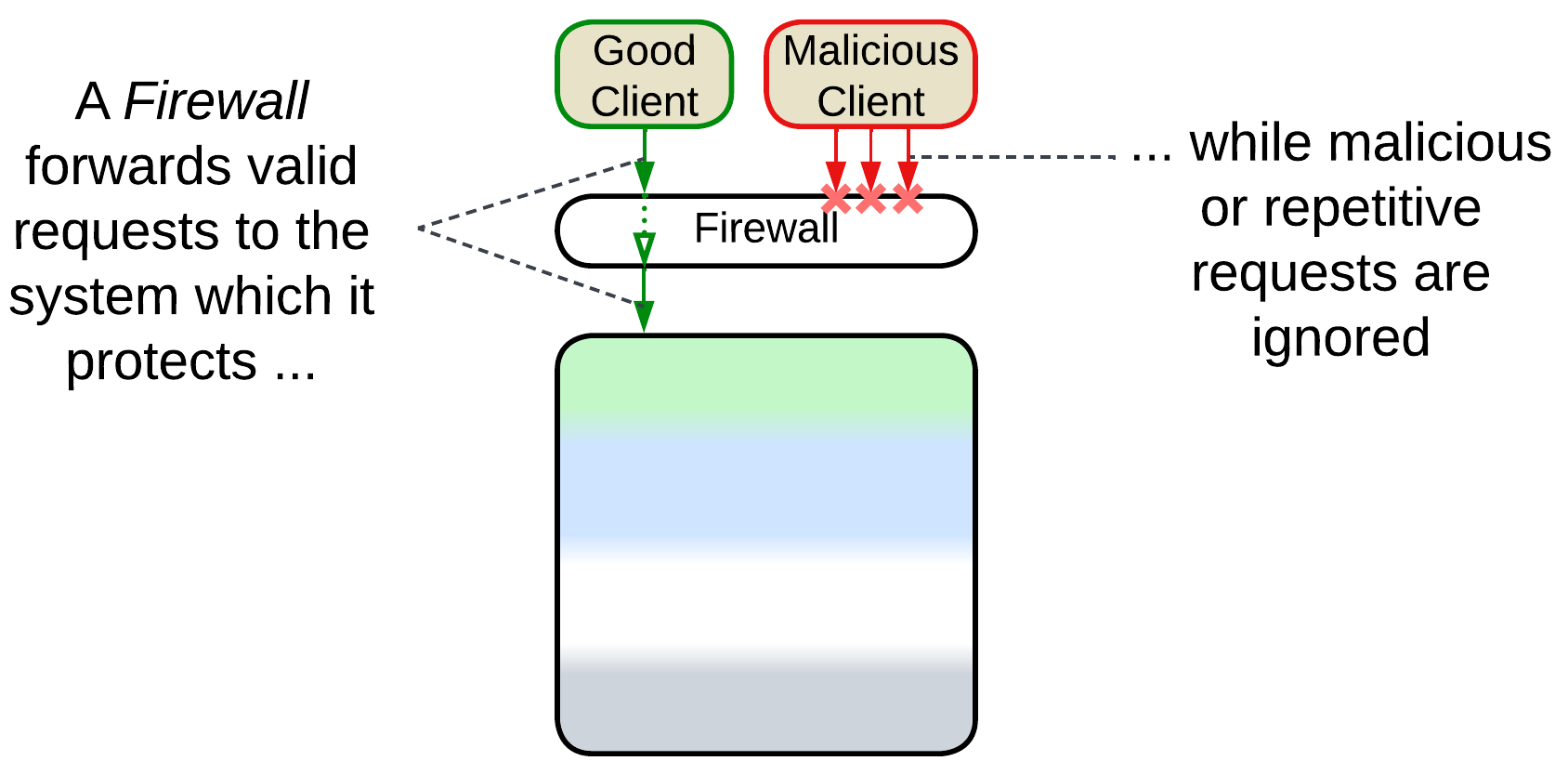

- A Firewall slows down processing of good requests but protects the system from attacks.

- Both a Load Balancer and a Dispatcher allow for the use of multiple servers (with identical or specialized components, correspondingly) to improve the system’s throughput but they still add to the minimum latency.

- An Adapter adds compatibility but its latency cost is higher than with other Proxies as it not only forwards the original message but also changes its payload – an activity which involves data processing and serialization.

A Cache is a bit weird in that respect. It improves latency and throughput for repeated requests but degrades latency for unique ones. Furthermore, it is often colocated with some other kind of Proxy to avoid the extra network hop between the Proxies, which makes caching almost free in terms of latency.
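A rough back-of-the-envelope model shows why a Cache cuts both ways. It assumes that every request pays the cache lookup and that a miss additionally pays the full backend latency; the function names and the numbers in the usage lines are illustrative only.

```python
def mean_latency_with_cache(hit_ratio: float, cache_ms: float, backend_ms: float) -> float:
    """Every request pays the cache lookup; only misses also pay the backend."""
    return cache_ms + (1.0 - hit_ratio) * backend_ms


def break_even_hit_ratio(cache_ms: float, backend_ms: float) -> float:
    """Hit ratio above which the cache lowers the mean latency under this model."""
    return cache_ms / backend_ms


# A unique request (hit ratio 0) is slower than going to the backend directly.
unique_request_ms = mean_latency_with_cache(0.0, cache_ms=2.0, backend_ms=20.0)
# A repeated request (hit ratio 1) costs only the cache lookup.
repeated_request_ms = mean_latency_with_cache(1.0, cache_ms=2.0, backend_ms=20.0)
```

Under this model the Cache pays off once the hit ratio exceeds `cache_ms / backend_ms`, which is why colocating it with another Proxy (a smaller `cache_ms`) makes it almost free.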

Dependencies #

Proxies widely vary in their functionality and level of intrusiveness. The most generic proxies, like Firewalls, may not know anything about the system or its clients. A Response Cache or Adapter must parse incoming messages, thus it depends on the communication protocol and message format. A Load Balancer or Dispatcher is aware of both the protocol and system composition.

Because Proxies tend to have their dependencies configured at startup or through their APIs, there is usually no need to modify the code of a Proxy when something changes in the underlying system.

Applicability #

Proxy helps with:

- Multi-component systems. Having multiple types and/or instances of services means there is a need to know the components’ addresses to access them. A Proxy encapsulates that knowledge and may also provide other common functionality as an extra benefit.

- Dynamic scaling or sharding. The Proxy both knows the system’s structure (the address of each instance of a service) and delivers user requests, thus it is the place to implement sharding (when a service instance is dedicated to a subset of users) or load balancing (when any service instance can serve any user) and even manage the size of the service instance Pool.

- Multiple client protocols. When the Proxy is the endpoint for the system’s users it may translate multiple external (user-facing) protocols into a unified internal representation.

- System security. Though a Proxy does not make a system more secure, it takes away the burden of security considerations from the services which implement the business logic, improving the separation of concerns and making the system components more simple and stupid. An off-the-shelf Proxy may be less vulnerable compared to in-house services (but don’t disregard security through obscurity!).

Proxy hurts:

- Critical real-time paths. It adds an extra hop in request processing, increasing latency for thoroughly optimized use cases. Such requests may need to bypass Proxies.

Relations #

Proxy:

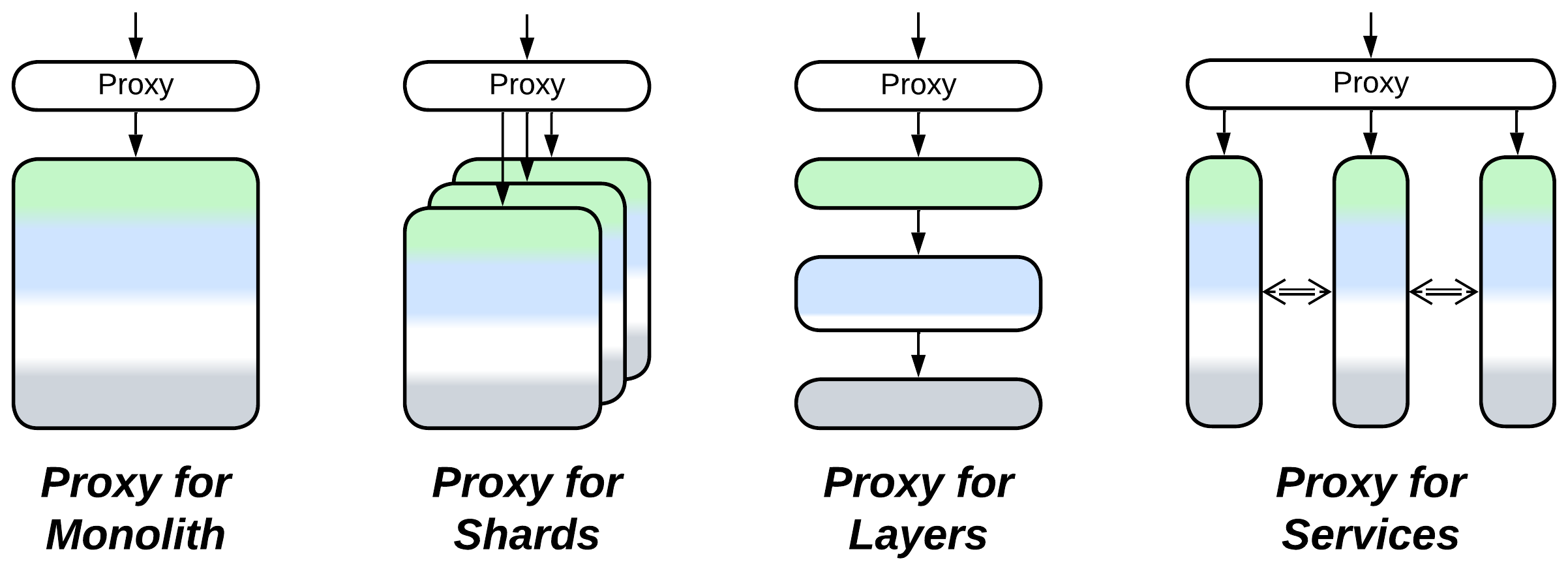

- Extends Monolith or Layers (forming Layers), Shards, or Services.

- Can be extended by another Proxy or merged with an Orchestrator into an API Gateway.

- At least one Proxy per service is employed by Message Bus, Enterprise Service Bus, Service Mesh, and Hexagonal Architecture.

- Is a special case (when there is a single kind of client) of Backends for Frontends.

Variants by transparency #

A Proxy may either fully isolate the system it represents or merely help establish connections between clients and servers. This resembles closed and open layers because a Proxy is a layer between a system and its clients.

Full Proxy #

A Full Proxy processes every message between the system and its clients. It completely isolates the system and may meddle with the protocols but it is resource-heavy and adds to latency. Adapters and Response Caches are always Full Proxies.

Half-Proxy #

A Half-Proxy intercepts, analyzes, and routes the session establishment request from a client but then goes out of the loop. It may still forward the subsequent messages without looking into their content or it may even help connect the client and server directly, which is known as direct server return (DSR). This approach is faster and much less resource-hungry but is also less secure and less flexible than that of Full Proxy. A Firewall, Load Balancer, or Reverse Proxy may act as a Half-Proxy. IP telephony servers often use DSR: the server helps call parties find each other and establish direct media communication.
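The difference between the two variants can be sketched as follows. This is a deliberately simplified in-process model: returning a `Server` object to the client stands in for a direct connection, and all the class names and the modulo-based server choice are assumptions made for the example.

```python
from typing import List


class Server:
    def __init__(self, name: str) -> None:
        self.name = name

    def handle(self, message: str) -> str:
        return f"{self.name}: {message}"


class FullProxy:
    """Stays in the data path: every message passes through it."""

    def __init__(self, server: Server) -> None:
        self._server = server
        self.messages_seen = 0

    def handle(self, message: str) -> str:
        self.messages_seen += 1  # a place to inspect or rewrite the message
        return self._server.handle(message)


class HalfProxy:
    """Takes part in session establishment only, then steps out of the loop."""

    def __init__(self, servers: List[Server]) -> None:
        self._servers = servers
        self.sessions_seen = 0

    def connect(self, client_id: int) -> Server:
        self.sessions_seen += 1
        # Route the session once; the client then talks to the server directly.
        return self._servers[client_id % len(self._servers)]


full = FullProxy(Server("s"))
full.handle("a")
full.handle("b")

half = HalfProxy([Server("s0"), Server("s1")])
session = half.connect(client_id=3)
reply = session.handle("hi")
session.handle("bye")
```

The Full Proxy has seen both messages, while the Half-Proxy has seen one session and no message content at all. That is what makes it cheaper and also less able to protect or adapt the traffic.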

Variants by placement #

As a Proxy stands between a (sub)system and its client(s), we can imagine a few ways to deploy it:

Separate deployment: Standalone #

We can deploy a Proxy as a separate system component. This has the downside of an extra network hop (higher latency) on the path of every client’s request to the system and back, but that is unavoidable in the following cases:

- The Proxy uses a lot of system resources, thus it cannot be colocated with another component. This mostly affects Firewall and Cache.

- The Proxy is stateful and deals with multiple services, which is true for a Load Balancer, Reverse Proxy, or API Gateway.

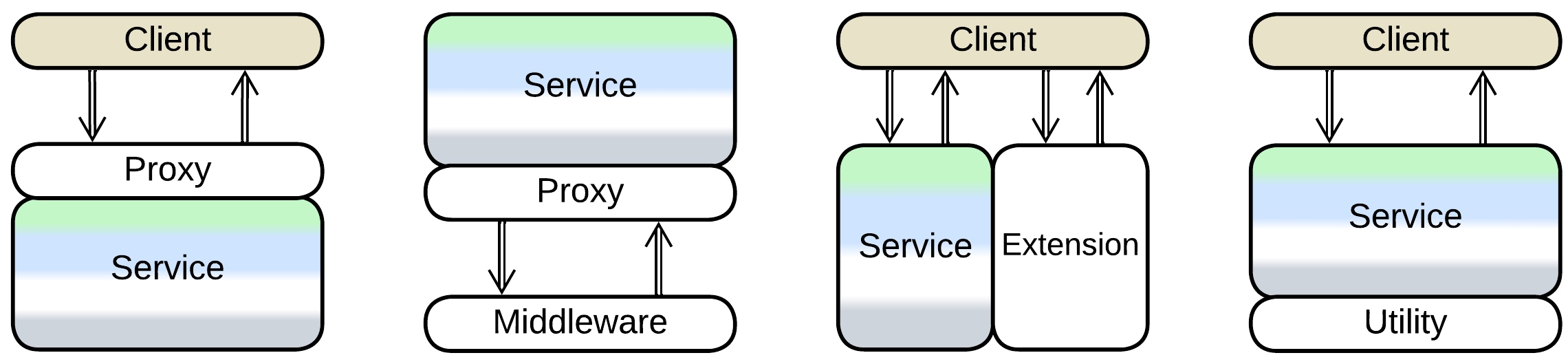

On the system side: Sidecar #

We can often co-locate a Proxy with our system when the latter is not distributed. That avoids the extra network delay, traffic, and operational complexity, and does not add any new hardware which can fail at the most untimely moments. Such a placement is called Sidecar [DDS] and it is mostly applicable to Adapters.

It should be noted that Sidecar – co-locating a generic component and business logic – is more of a DevOps approach than an architectural pattern, thus we can see it used in a variety of ways [DDS]:

- As a Proxy between a component and its clients.

- As an extra service that provides observability or configures the main service.

- As a layer with general-purpose utilities.

- As an Adapter for Middleware.

Service Mesh (Middleware for Microservices) makes heavy use of Sidecars.

On the client side: Ambassador #

Finally, a Proxy may be co-located with a component’s clients, making it an Ambassador [DDS]. Its use cases include:

- Low-latency systems with stateful shards – each client should access the shard that has their data, which the Proxy knows how to choose.

- Adapters that help client applications use an optimized or secure protocol.

Variants by function #

Proxies are ubiquitous in backend systems as using one or several of them frees the underlying code from the need to provide boilerplate non-business-logic functionality. It is common to have several kinds of Proxies deployed sequentially (e.g. API Gateways behind Load Balancers behind a Firewall) with many of them pooled to improve performance and stability. It is also possible to employ multiple kinds of Proxies, each serving its own kind of client, in parallel, resulting in Backends for Frontends.

As Proxies are used for many purposes, there are a variety of their specializations and names. Below is a very rough categorization, complicated by the fact that real-world Proxies often implement several categories at once.

For example, NGINX claims to be: an HTTP web server, Reverse Proxy, content Cache, Load Balancer, TCP/UDP Proxy server, and mail Proxy server – all at once.

Firewall, (API) Rate Limiter, API Throttling #

The Firewall is a component for white- and black-listing network traffic, mostly to protect against attacks. It is possible to use both generic hardware Firewalls on the external perimeter for brute force (D)DoS protection and more complex access rules at a second layer of software Firewalls to protect critical data and services from unauthorized access.

Rate Limiting makes sure that no single client uses too much of the system’s resources – it sets a limit on how many requests from a single source the system can process over a unit of time. Any requests over the limit are rejected.

Throttling differs from Rate Limiting in that over-the-limit requests are queued for later processing, effectively slowing down communication with aggressive clients.

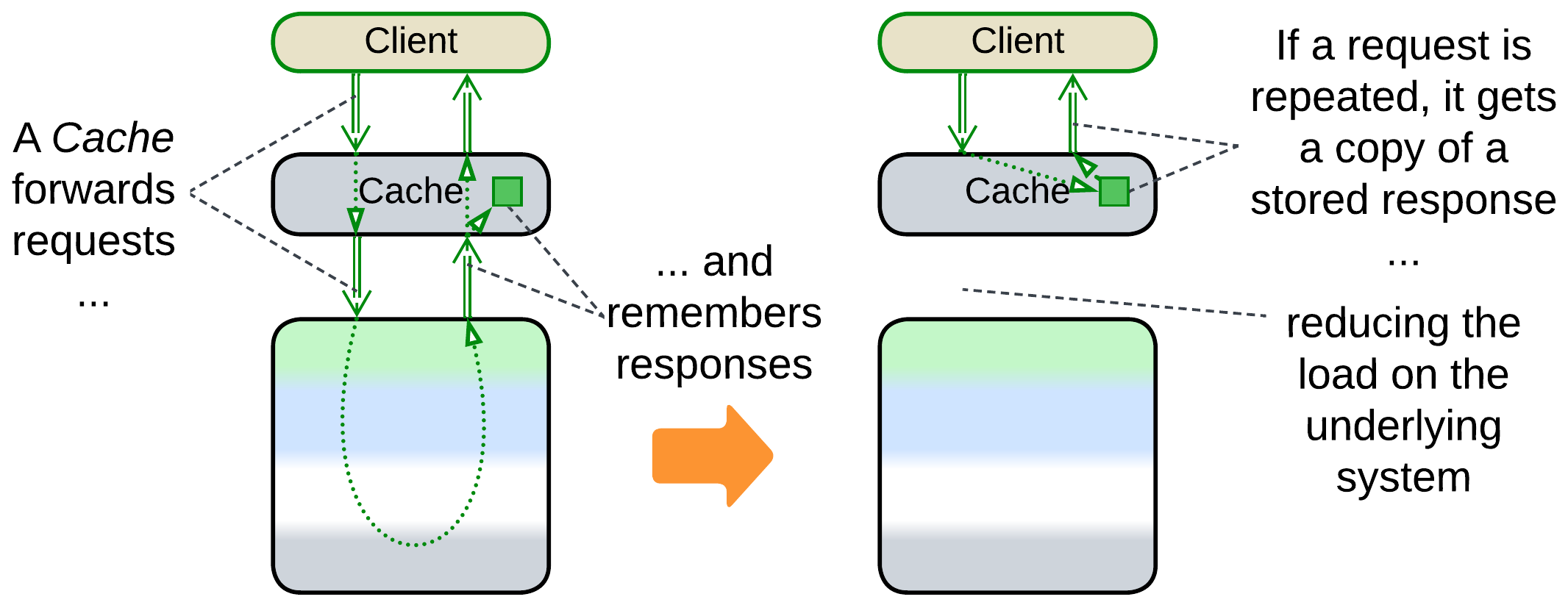
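The difference between Rate Limiting and Throttling can be sketched as follows. The fixed-window counting, the class names, and the explicit `now` argument (used instead of a real clock to keep the example deterministic) are assumptions of this sketch; production limiters often use sliding windows or token buckets, and a real throttler would drain its queue on a timer.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


class RateLimiter:
    """Fixed-window limiter: rejects requests over `limit` per `window` seconds."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        # client -> (index of the current window, requests counted in it)
        self._counters: Dict[str, Tuple[int, int]] = {}

    def allow(self, client: str, now: float) -> bool:
        index = int(now // self.window)
        last_index, count = self._counters.get(client, (index, 0))
        if last_index != index:
            count = 0  # a new window has started
        if count >= self.limit:
            return False  # over the limit: the request is rejected
        self._counters[client] = (index, count + 1)
        return True


class Throttler:
    """Queues over-the-limit requests and releases them in later windows."""

    def __init__(self, limit: int, window: float) -> None:
        self._limiter = RateLimiter(limit, window)
        self._pending: Dict[str, Deque[str]] = {}

    def submit(self, client: str, request: str, now: float) -> List[str]:
        """Accept a request and return whatever may be forwarded right now."""
        self._pending.setdefault(client, deque()).append(request)
        return self.drain(client, now)

    def drain(self, client: str, now: float) -> List[str]:
        """Release queued requests while the client's budget allows it."""
        queue = self._pending.get(client, deque())
        released: List[str] = []
        while queue and self._limiter.allow(client, now):
            released.append(queue.popleft())
        return released
```

With a limit of two requests per second, the `RateLimiter` drops a client's third request in the same second, while the `Throttler` holds it and forwards it once the next window opens.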

Response Cache, Read-Through Cache, Write-Through Cache, Write-Behind Cache, Cache, Caching Layer, Distributed Cache, Replicated Cache #

If a system often gets identical requests, it is possible to remember its responses to the most frequent of them and return the cached response without fully re-processing the request. The real thing is more complicated because users tend to change the data which the system stores, necessitating a variety of cache refresh policies. A Response Cache may be co-located with a Load Balancer, or it may be sharded (each Cache processes a unique subset of requests) and/or replicated (all the Caches are similar) [DDS] and thus require a Load Balancer of its own.

It is called a Response Cache because it stores the system’s responses to its users’ requests, or just a Cache [DDS] because it is the most common kind of Cache in system architecture.

If the cached subsystem is a database, we can discern between read and write requests:

- Read-Through Cache is when the Cache is updated on a miss for a read request but is transparent to or invalidated by write requests.

- Write-Through Cache is when the Cache is updated by write requests that pass through it.

- Write-Behind Cache is when the Cache aggregates multiple write requests to later send them to the database as a batch, saving bandwidth and possibly merging multiple updates of the same key.
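The three policies can be compared in a small sketch. The `Database` class is a stand-in that merely counts accesses, the read-through variant invalidates on write (the text also allows it to be transparent to writes), and the explicit `flush` call replaces whatever timer or size threshold a real Write-Behind Cache would use. All the names are illustrative.

```python
from typing import Dict, Optional


class Database:
    """Stand-in for the cached subsystem; counts accesses for illustration."""

    def __init__(self) -> None:
        self.rows: Dict[str, str] = {}
        self.reads = 0
        self.writes = 0

    def read(self, key: str) -> Optional[str]:
        self.reads += 1
        return self.rows.get(key)

    def write(self, key: str, value: str) -> None:
        self.writes += 1
        self.rows[key] = value


class ReadThroughCache:
    def __init__(self, db: Database) -> None:
        self.db = db
        self.entries: Dict[str, Optional[str]] = {}

    def read(self, key: str) -> Optional[str]:
        if key not in self.entries:  # miss: fetch from the database and remember
            self.entries[key] = self.db.read(key)
        return self.entries[key]

    def write(self, key: str, value: str) -> None:
        self.entries.pop(key, None)  # invalidate; the next read reloads the key
        self.db.write(key, value)


class WriteThroughCache(ReadThroughCache):
    def write(self, key: str, value: str) -> None:
        self.entries[key] = value  # the write updates the cache on its way through
        self.db.write(key, value)


class WriteBehindCache(ReadThroughCache):
    def __init__(self, db: Database) -> None:
        super().__init__(db)
        self.dirty: Dict[str, str] = {}

    def write(self, key: str, value: str) -> None:
        self.entries[key] = value
        self.dirty[key] = value  # repeated updates of one key are merged here

    def flush(self) -> None:
        """Send the accumulated writes to the database as one batch."""
        for key, value in self.dirty.items():
            self.db.write(key, value)
        self.dirty.clear()
```

Two writes to the same key through a `WriteBehindCache` reach the database as a single write after `flush()`, at the price of losing the pending updates if the Cache dies before flushing.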

It is possible to combine multiple servers into a virtual Caching Layer [DDS]:

- In the simplest case, which does not require any additional instrumentation aside from a Load Balancer, the instances of the Caches are independent and may return stale results.

- In a Distributed Cache, driven by a Sharding Proxy, every server (shard) holds a subset of the cached data, thus allowing for caching datasets which don’t fit in a single computer’s memory.

- In a Replicated Cache the datasets of all the servers are identical and synchronized on any modification. This scales the cache’s throughput but requires a kind of synchronization engine, like a Data Grid.

Load Balancer, Sharding Proxy, Cell Router, Messaging Grid, Scheduler #

Here we have a hardware or software component which distributes user traffic among multiple instances of a service:

- A Sharding Proxy [DDS] selects a shard based on specific data which is present in a request (OSI layer 7 request routing) for a system where each shard owns a part of the system’s state, therefore only one (or a few for replicated shards) of the shards has the data required to process the client’s request.

- A Load Balancer [DDS] for a Pool of stateless instances or Replicas, or a Messaging Grid [FSA] of Space-Based Architecture, evenly distributes the incoming traffic over identical request processors (OSI layer 4 load balancing) to protect any instance of the underlying system from overload. In some cases it needs to be session-aware (process OSI layer 7) to ensure that all the requests from a client are forwarded to the same instance of the service [DDS].

- A Load Balancer may also forward read requests to Read-Only Replicas of the data while write requests are sent to the master database (CQRS-like behavior).

- A Cell Router chooses a data center which is the closest to the user’s location.
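The first three routing policies from the list above differ mainly in what they look at when picking an instance. The sketch below assumes instances are identified by plain strings, uses round-robin as one possible way to spread load evenly, and uses hash-modulo sharding for brevity (it reshuffles most keys when the number of shards changes, so real systems tend to prefer consistent hashing or a lookup table). All the class names are made up.

```python
import hashlib
import itertools
from typing import List


class RoundRobinBalancer:
    """Load Balancer: any instance can serve any request, so just take turns."""

    def __init__(self, instances: List[str]) -> None:
        self._cycle = itertools.cycle(instances)

    def pick(self) -> str:
        return next(self._cycle)


class ShardingProxy:
    """Sharding Proxy: the request's data (here a user id) determines the shard."""

    def __init__(self, shards: List[str]) -> None:
        self._shards = shards

    def pick(self, user_id: str) -> str:
        # A stable hash, unlike the built-in hash(), survives process restarts.
        digest = hashlib.sha256(user_id.encode("utf-8")).digest()
        return self._shards[int.from_bytes(digest[:8], "big") % len(self._shards)]


class ReadWriteSplitter:
    """Sends writes to the primary database and spreads reads over the replicas."""

    def __init__(self, primary: str, replicas: List[str]) -> None:
        self._primary = primary
        self._replicas = RoundRobinBalancer(replicas)

    def pick(self, is_write: bool) -> str:
        return self._primary if is_write else self._replicas.pick()
```

A `RoundRobinBalancer` never inspects the request, which is why it can work at the transport layer, whereas the `ShardingProxy` and the `ReadWriteSplitter` must understand enough of the request to extract the user id or the kind of operation.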

Load Balancers are very common in high-load backends. High-availability systems deploy multiple instances of a Load Balancer in parallel to remain functional if one of the Load Balancers fails. CPU-intensive applications (like 3D games) often post asynchronous tasks for execution by Thread Pools under the supervision of a Scheduler. A similar pattern is found in OS kernels and fiber or actor frameworks where a limited set of CPU-affined threads is scheduled to run a much larger number of tasks.

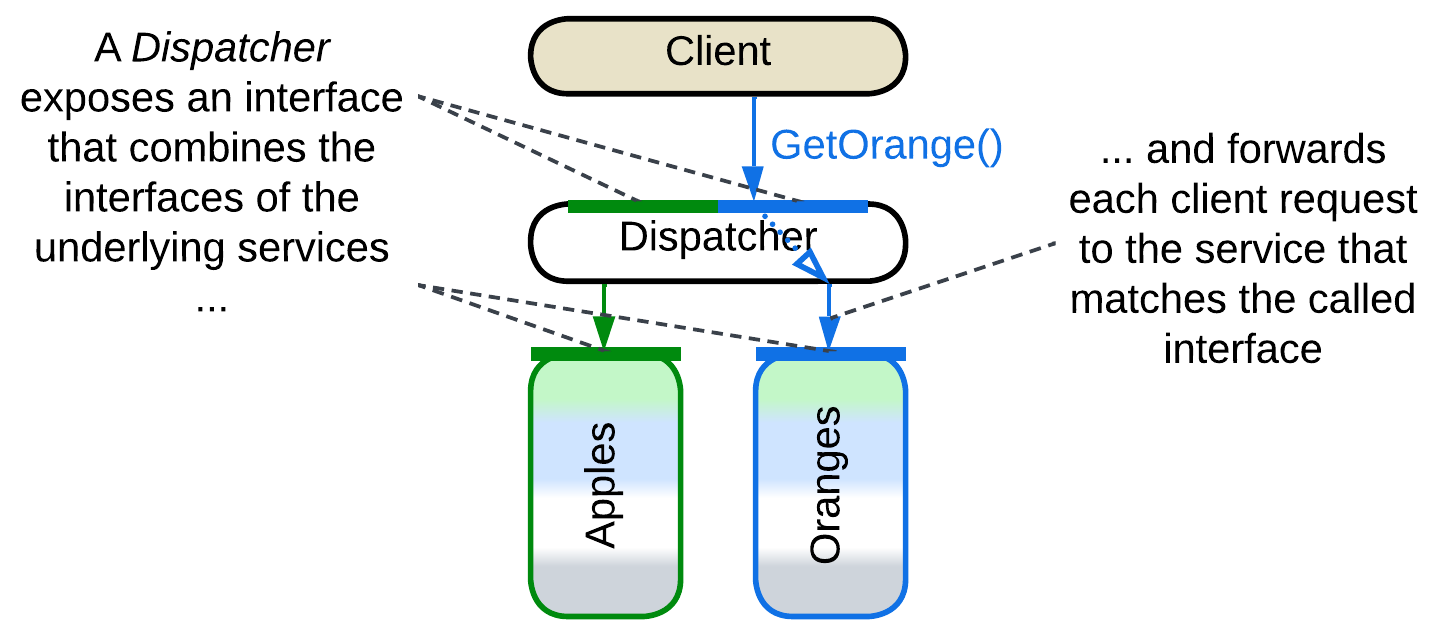

Dispatcher, Reverse Proxy, Ingress Controller, Edge Service, Microgateway #

The Reverse Proxy, Ingress Controller, Edge Service, or Microgateway is a router that stands between the Internet and the organization’s internal network. It allows clients to use a public address for the system without knowing how and where their requests are processed. It parses user requests and forwards them to an internal server based on the requests’ contents (typically the host name, URL path, or headers). A Reverse Proxy can be extended with a firewall, SSL termination, load balancing, and caching functionality. Examples include NGINX.

Dispatcher [POSA1] is a similar component for a single-process application. It serves a complex command line interface by receiving and preprocessing user commands only to forward each command to a module which knows how to handle it. The modules may register their commands with the Dispatcher at startup or there may be a static dispatch table in the code.
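A minimal sketch of a Dispatcher for a command line interface, assuming that modules register their handlers at startup. The `Dispatcher` class and the `echo` and `add` commands are invented for the example.

```python
import shlex
from typing import Callable, Dict, List

CommandHandler = Callable[[List[str]], str]


class Dispatcher:
    def __init__(self) -> None:
        self._commands: Dict[str, CommandHandler] = {}

    def register(self, name: str, handler: CommandHandler) -> None:
        """Called by each module at startup to claim its commands."""
        self._commands[name] = handler

    def dispatch(self, line: str) -> str:
        # Preprocessing: split the line into tokens and normalize the command name.
        tokens = shlex.split(line)
        if not tokens:
            return "error: empty command"
        name, args = tokens[0].lower(), tokens[1:]
        handler = self._commands.get(name)
        if handler is None:
            return f"error: unknown command '{name}'"
        # Forward the command to the module which knows how to handle it.
        return handler(args)


def echo(args: List[str]) -> str:
    return " ".join(args)


def add(args: List[str]) -> str:
    return str(sum(int(a) for a in args))


dispatcher = Dispatcher()
dispatcher.register("echo", echo)
dispatcher.register("add", add)
```

The static alternative mentioned above would simply initialize `_commands` with a literal dictionary instead of exposing `register`.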

You may have noticed that a Dispatcher or Reverse Proxy is quite similar to a Load Balancer or Sharding Proxy – they differ mostly in what kind of system lies below them: Services for the former pair and Shards for the latter.

Adapter, Anticorruption Layer, Open Host Service, Gateway, Message Translator, API Service, Cell Gateway, (inexact) Backend for Frontend, Hardware Abstraction Layer (HAL), Operating System Abstraction Layer (OSAL), Platform Abstraction Layer (PAL), Database Abstraction Layer (DBAL or DAL), Database Access Layer, Data Mapper, Repository #

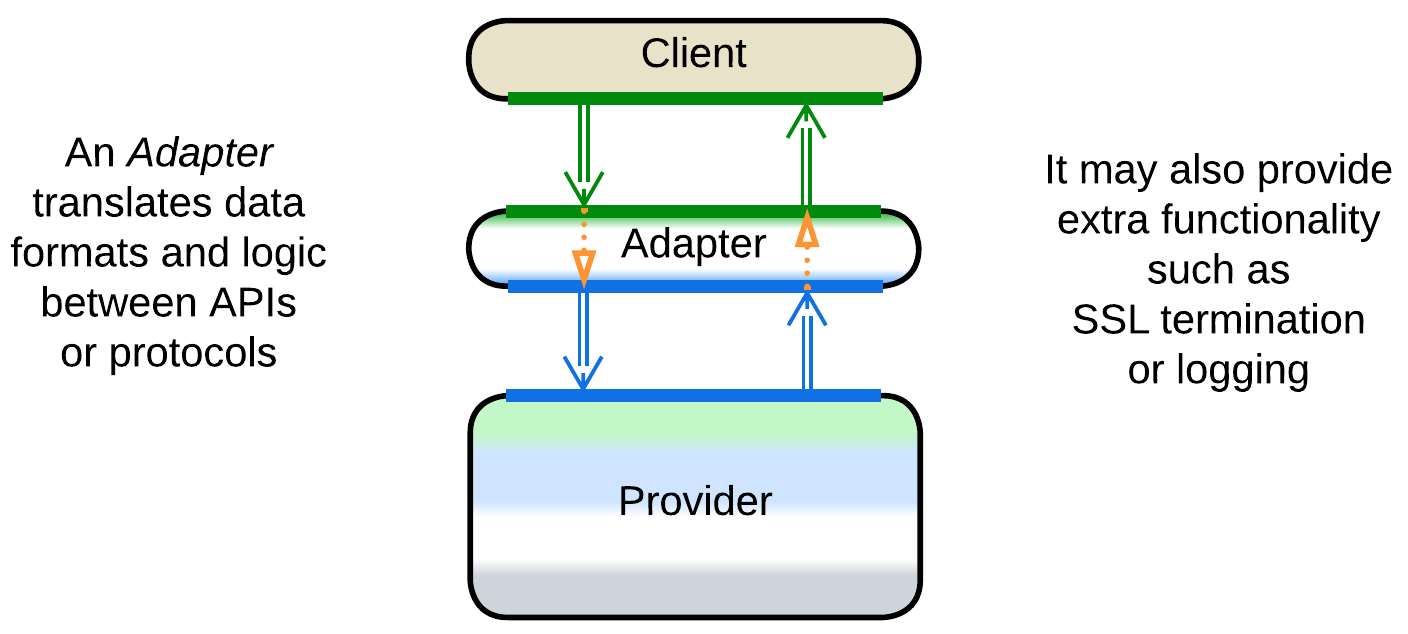

An Adapter [GoF, DDS] is a mostly stateless Proxy that translates between an internal and public protocol and API formats. It may often be co-located with a Reverse Proxy. When it adapts messages, it is called a Message Translator [EIP, POSA4].

As an Adapter adapts in two directions, it is often found between two components (in Hexagonal Architecture) or between a component and Middleware (in Enterprise Service Bus and Service Mesh).

In [DDD], when one component (consumer) depends on another (supplier), there may be an Adapter in between to decouple them. It is called Anticorruption Layer [DDD] when owned by the consumer’s team or Open Host Service [DDD] if the supplier adds it to grant one or more stable interfaces (Published Languages [DDD]).

A Gateway [PEAA] or API Service often implies an Adapter with extra functionality, like Reverse Proxy, authorization and authentication. Cell Gateway is a Gateway for a Cell.

When a Gateway translates a single public API method into several calls towards internal services, it becomes an API Gateway [MP] which is an aggregate of Proxy (for protocol translation) and Orchestrator.

An Adapter between an end-user client (web interface, mobile application, etc.) and the system’s API is often called Backend for Frontend. It decouples the UI from the backend-owned system’s API, giving the teams behind both components freedom to work with less synchronization.

There is also a whole bunch of Adapters that aim to protect the business logic from its environment, an idea which is perfected by Hexagonal Architecture:

- Hardware Abstraction Layer (HAL) hides details of hardware to make the code portable.

- Operating System Abstraction Layer (OSAL) or Platform Abstraction Layer (PAL) abstracts the OS to make the application cross-platform.

- Database Abstraction Layer (DBAL or DAL), Database Access Layer [POSA4] or Data Mapper [PEAA] attempts to help build database-agnostic applications by making all the databases look the same.

- Repository [PEAA, DDD] provides methods to access a record stored in a database as if it were an object in the application’s memory.

An Adapter creates a layer of indirection between your code and a library or service which it uses. If the external component’s interface changes, or you need to substitute the thing with an incompatible implementation from another vendor, and your code accesses the component directly, you will have to make many changes throughout your code. However, if there is an Adapter in between, your code depends only on the interface of the Adapter. And when the external component changes or is replaced, only the relatively small Adapter’s implementation needs to change while your main code is blessed with ignorance of what lies beyond the Adapter’s borders.
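The paragraph above can be illustrated with two incompatible vendor clients hidden behind one interface. Both vendor classes and their methods are hypothetical stand-ins for third-party libraries, not real APIs.

```python
from typing import Protocol


class MessageSender(Protocol):
    """The only interface the business logic depends on."""

    def send(self, recipient: str, text: str) -> bool: ...


class VendorASmsClient:
    """Hypothetical third-party client with a dictionary-based API."""

    def submit_sms(self, payload: dict) -> dict:
        return {"code": 0, "to": payload["msisdn"]}


class VendorBSmsClient:
    """Hypothetical replacement from another vendor with an incompatible API."""

    def push(self, number: str, message: str) -> str:
        return "OK"


class VendorAAdapter:
    def __init__(self, client: VendorASmsClient) -> None:
        self._client = client

    def send(self, recipient: str, text: str) -> bool:
        result = self._client.submit_sms({"msisdn": recipient, "body": text})
        return result["code"] == 0


class VendorBAdapter:
    def __init__(self, client: VendorBSmsClient) -> None:
        self._client = client

    def send(self, recipient: str, text: str) -> bool:
        return self._client.push(recipient, text) == "OK"


def notify_user(sender: MessageSender, phone: str) -> bool:
    """Business logic: blissfully unaware of which vendor is behind the Adapter."""
    return sender.send(phone, "Your order has shipped")
```

Switching vendors means writing `VendorBAdapter` and changing the one place where the Adapter is constructed; `notify_user` and every other caller stay untouched.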

API Gateway #

API Gateway [MP] is a fusion of Gateway (Proxy) and API Composer (Orchestrator). The Gateway aspect encapsulates the external (public) protocol while the API Composer translates the system’s high-level public API methods into multiple (usually parallel) calls to the APIs of internal components, collects the results and conjoins them into a response.
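The two aspects can be seen side by side in a small asynchronous sketch. The internal services are simulated by coroutines that sleep briefly, the public protocol is assumed to be JSON, and every name here (`fetch_profile`, `fetch_orders`, `get_dashboard`) is invented for the example.

```python
import asyncio
import json


async def fetch_profile(user_id: int) -> dict:
    """Stand-in for a call to an internal profile service."""
    await asyncio.sleep(0.01)
    return {"id": user_id, "name": "Alice"}


async def fetch_orders(user_id: int) -> list:
    """Stand-in for a call to an internal orders service."""
    await asyncio.sleep(0.01)
    return [{"order": 1}, {"order": 2}]


async def get_dashboard(raw_request: str) -> str:
    # Gateway aspect: decode the public protocol.
    request = json.loads(raw_request)
    user_id = int(request["user_id"])
    # API Composer aspect: call the internal components in parallel...
    profile, orders = await asyncio.gather(
        fetch_profile(user_id),
        fetch_orders(user_id),
    )
    # ...then collect the results into a single response.
    return json.dumps({"user": profile["name"], "order_count": len(orders)})


response = asyncio.run(get_dashboard('{"user_id": 7}'))
```

Because the two internal calls run concurrently, the client waits for roughly the slower of them rather than for their sum.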

API Gateway is discussed in more detail under Orchestrator.

Evolutions #

It usually makes little sense to get rid of a Proxy once it has been integrated into a system. The main drawback of using a Proxy is a slight increase in latency for user requests, which may be mitigated by creating bypass channels between the clients and a service that needs low latency. The other drawback of the pattern, the Proxy being a single point of failure, is countered by deploying multiple instances of the Proxy.

As Proxies are usually third-party products, there is not much we can change about them:

- We can add another kind of Proxy on top of an existing one.

- We can use a stack of Proxies per client, making Backends for Frontends.

Summary #

A Proxy represents your system to its clients and takes care of some aspects of the communication. It is common to see multiple Proxies deployed sequentially as they are often stackable.